A Grain Of

Rendering Shenzhen's Techno-Natural Materialities Audible+Visible

2024.4--2024.12

Project Members

Enza Migliore

Marcel Zaes Sagesser

Hanyu Qu

Yaohan Zhang | Yujing Ma

Yiyuan Bai | Bo Dong

Zhiyi Zhang | Zhaorui Liu

Minghim Tong | Simeng Wang

Zichun Xia | Zhonghui Tang

MY ROLE

Hardware Development

Sound Visualization

Audio Editing

TOOLS

Microphones

Loudspeakers & Transducers

DFPlayer Mini

Sonic Visualiser

SUPPORTED BY

Sound Studies Group

"A Grain Of" is an ongoing, technology-driven project that reads and senses emerging urban environments through their materiality, taking Shenzhen as its example. The complexity of the city is rendered through conceptual manipulation of data collected over eight weeks of extensive fieldwork at three exemplary locations: a mountainous urban forest, an under-construction high-tech area on reclaimed land, and a densely populated historical retail area. The collected materials, both physical and digital, show that the matter we live with daily is an assemblage shaped by human, geological, industrial, and technological forces, characterized by the specifics of Shenzhen. This idiosyncratic, interdisciplinary approach allows the project to expand beyond physical matter to sonic, magnetic, vibrational, and digital artifacts. Methods of speculative design and scientific data re-interpretation are then combined with advanced manufacturing and digital media technologies. To date, the project has resulted in two scholarly publications and three art installations, each marked with a distinct subtitle. The installations aim to bring the audience quite literally "into" a small grain of the city of Shenzhen by rendering its materiality perceptible on a micro scale. In this way, the invisible, inaudible, and unnoticeable is augmented into a fictional display of the city's smallest "grains".

Fieldwork Recording

This project uses multiple microphones to collect sound:

RODE BLIMP shotgun

Sennheiser AMBEO VR MIC

AKG C411 PP

Zoom H3-VR

WILDLIFE ACOUSTICS

Sound Visualization - Spectrogram

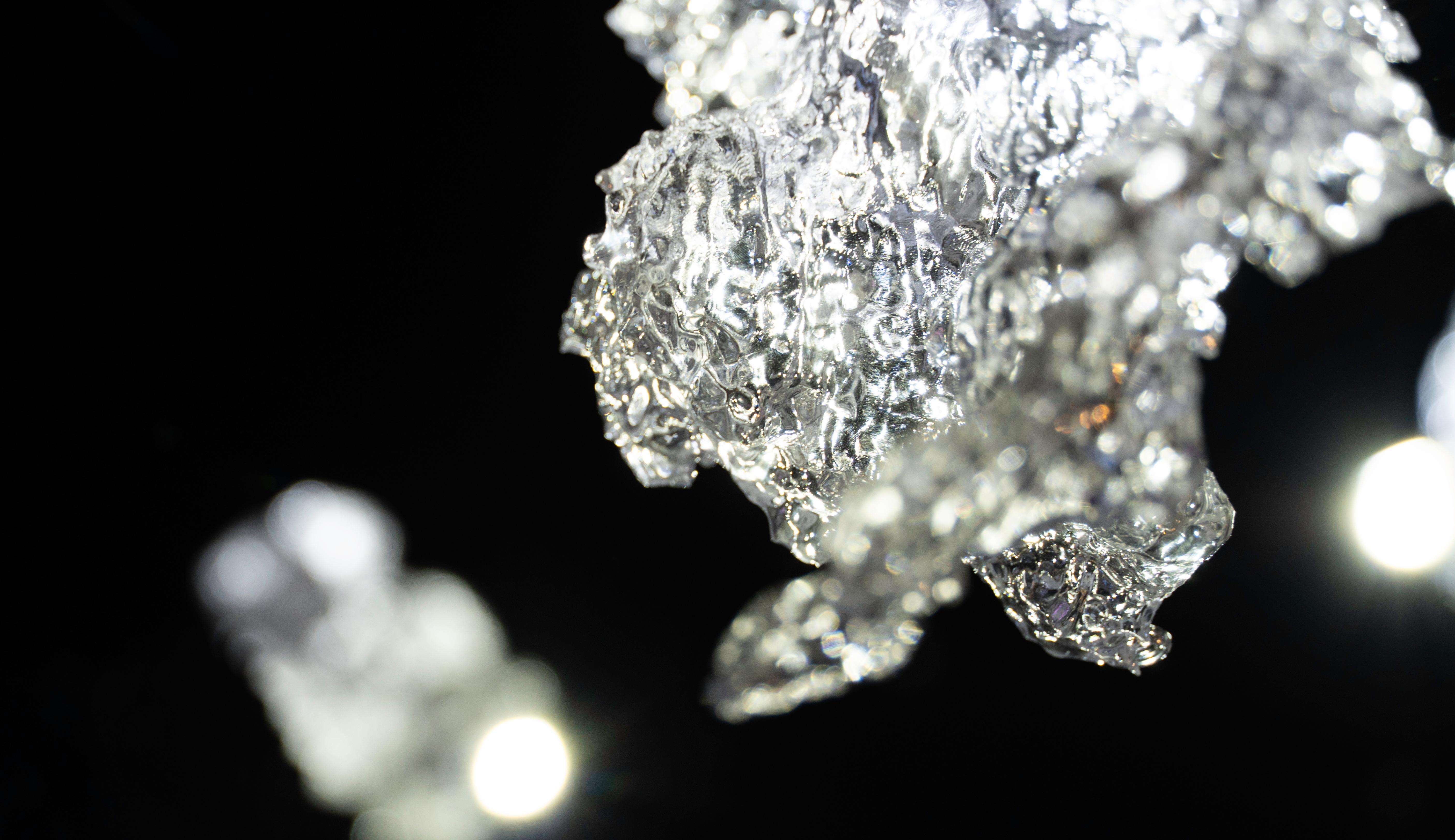

Each recording undergoes detailed processing to extract nuanced details, ranging from the gentle flow of water streams to the bustling noise of construction activity that portrays human traces. After sampling, the recordings are analyzed in Sonic Visualiser (an application for viewing and analyzing the contents of audio files), which generates chromagram and black-and-white spectrogram images from the level values of the audio samples. These images are then used as procedural textures in Blender to texture the 3D models of our collected samples. The primary mesh is physically displaced according to the power in each frequency band, and the highest values are used as a falloff map and marked with a different shader. The result is 25 unique pieces of sound matter with crystallized surfaces that physically embody the sound textures while preserving the original sample structure. We chose to 3D-print these objects in black resin and transparent resin.
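The image-to-geometry step can be approximated outside Sonic Visualiser and Blender. The sketch below is a minimal NumPy stand-in, not the project's actual pipeline: it assumes mono floating-point samples, computes a log-power short-time Fourier transform spectrogram scaled to [0, 1], selects the highest values as a falloff mask, and offsets mesh vertices along their normals by the texture value sampled at each vertex's UV coordinate. The FFT size, hop length, 80 dB range, quantile, strength, and all function names are hypothetical choices for illustration.

```python
import numpy as np


def spectrogram_texture(samples, n_fft=1024, hop=256, dynamic_range_db=80.0):
    """Log-power spectrogram scaled to [0, 1]; rows are frequency bins, columns are time frames."""
    samples = np.asarray(samples, dtype=float)
    if len(samples) < n_fft:
        raise ValueError("need at least n_fft samples")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(samples) - n_fft) // hop
    frames = np.stack([samples[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2  # shape (n_frames, n_fft // 2 + 1)
    db = 10.0 * np.log10(power + 1e-12)
    db = np.maximum(db, db.max() - dynamic_range_db)  # floor everything below the chosen range
    tex = (db - db.min()) / (db.max() - db.min() + 1e-12)
    return tex.T[::-1]  # flip so low frequencies sit in the bottom row, as in a spectrogram image


def falloff_mask(tex, quantile=0.95):
    """Boolean mask of the highest texture values (the cells a second shader would mark)."""
    return tex >= np.quantile(tex, quantile)


def sample_texture(tex, uv):
    """Nearest-neighbour lookup of texture values at per-vertex UV coordinates in [0, 1]."""
    rows, cols = tex.shape
    c = np.clip(np.round(uv[:, 0] * (cols - 1)).astype(int), 0, cols - 1)
    r = np.clip(np.round((1.0 - uv[:, 1]) * (rows - 1)).astype(int), 0, rows - 1)
    return tex[r, c]


def displace(vertices, normals, heights, strength=0.05):
    """Move each vertex along its unit normal in proportion to its texture value."""
    return vertices + normals * (strength * heights)[:, None]
```

For a one-second 1 kHz sine sampled at 16 kHz, `spectrogram_texture` returns a 513 × 59 image whose brightest row corresponds to FFT bin 64 (1000 Hz). Displacing along normals mirrors the general idea of a displacement modifier; Blender's own implementation adds options such as a mid-level offset that are not modelled here.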

Hardware Integration

To foster interaction between the 3D-printed crystallized objects and their audience, we integrated loudspeakers and vibration motors into the objects, further demonstrating the relationship between materiality and sound. We use DFPlayer Mini modules and integrated circuits to control multiple speakers and transducers simultaneously, each producing a different sound, and these sound modules are securely embedded in the 3D-printed objects. Each sound module plays the sound samples corresponding to its object.
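For readers reproducing the playback side, a DFPlayer Mini can be driven over its UART with 10-byte command frames. The sketch below builds those frames in plain Python, assuming the module's documented serial protocol (start byte 0x7E, version 0xFF, length 0x06, command, feedback flag, 16-bit parameter, 16-bit two's-complement checksum, end byte 0xEF). It is an illustration, not the project's firmware; the `OBJECT_TRACKS` table and its object names are hypothetical.

```python
START, VERSION, LENGTH, END = 0x7E, 0xFF, 0x06, 0xEF
CMD_PLAY_TRACK = 0x03  # play a track by its number on the SD card
CMD_SET_VOLUME = 0x06  # set volume, 0..30

# Hypothetical mapping from a printed object to the track its module should play.
OBJECT_TRACKS = {"forest_grain_01": 1, "reclaimed_land_grain_02": 2, "retail_grain_03": 3}


def dfplayer_frame(command: int, param: int = 0, feedback: bool = False) -> bytes:
    """Build one 10-byte DFPlayer Mini serial command frame."""
    if not 0 <= param <= 0xFFFF:
        raise ValueError("param must fit in 16 bits")
    body = [VERSION, LENGTH, command, 0x01 if feedback else 0x00, param >> 8, param & 0xFF]
    checksum = -sum(body) & 0xFFFF  # two's complement of the byte sum, as a 16-bit value
    return bytes([START, *body, checksum >> 8, checksum & 0xFF, END])


def set_volume(level: int) -> bytes:
    """Frame that sets the module's output volume."""
    if not 0 <= level <= 30:
        raise ValueError("DFPlayer Mini volume ranges from 0 to 30")
    return dfplayer_frame(CMD_SET_VOLUME, level)


def play_for_object(name: str) -> bytes:
    """Frame that starts the track assigned to a given object."""
    return dfplayer_frame(CMD_PLAY_TRACK, OBJECT_TRACKS[name])
```

With pyserial, a frame could be sent as `serial.Serial("/dev/ttyUSB0", 9600).write(set_volume(20))`; the port name is an assumption, while 9600 baud is the module's default rate. Driving several modules at once would mean one UART (or one software serial port) per module.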

Related Papers

E. Migliore, M. Sagesser, Y. Ma (2024). The Grain No. 12/11 W4 102 1 From the City: Integrating a 3D Model with Audio Data as an Experimental Creative Method. Proceedings of the International Symposium on Visual Information Communication and Interaction (VINCI) 2024, 9-13 December, Taiwan, China. doi: 10.1145/3678698.3687179